-

Wednesday February 25, 2026

Any examples of apps with good first time experiences for VoiceOver users?

Any apps that have crafted a VoiceOver specific onboarding, rather than just making the visual onboarding accessible?

-

Friday December 19, 2025

Person on the street lighting a smoke through their Vision Pro

-

Monday November 10, 2025

Apple removed my app from the App Store for an intellectual property violation for using the word Siri in my app description to promote my App Shortcut.

-

Friday October 24, 2025

I’ve fallen into an App Review sarlacc pit and it’s getting bleak.

-

Thursday September 4, 2025

I’m presenting tonight at Xcoders Seattle! My talk is called “Tapping Into Visual Intelligence in iOS 26”. We’ll be wiring up an app integration into the system using the App Intents, Core Video and Vision frameworks. xcoders.org/2025/09/0…

-

Tuesday June 17, 2025

Just got a response from a feedback I filed last September. The Siri logo has been added in the new SF Symbols 7 beta. Would they have done this anyway? Maybe! But I’ll take the mini win.

FB15004675

Please add SF Symbols to represent Siri. I would use this symbol to create my own user ed around utterances users can say as Shortcuts.

-

Tuesday June 17, 2025

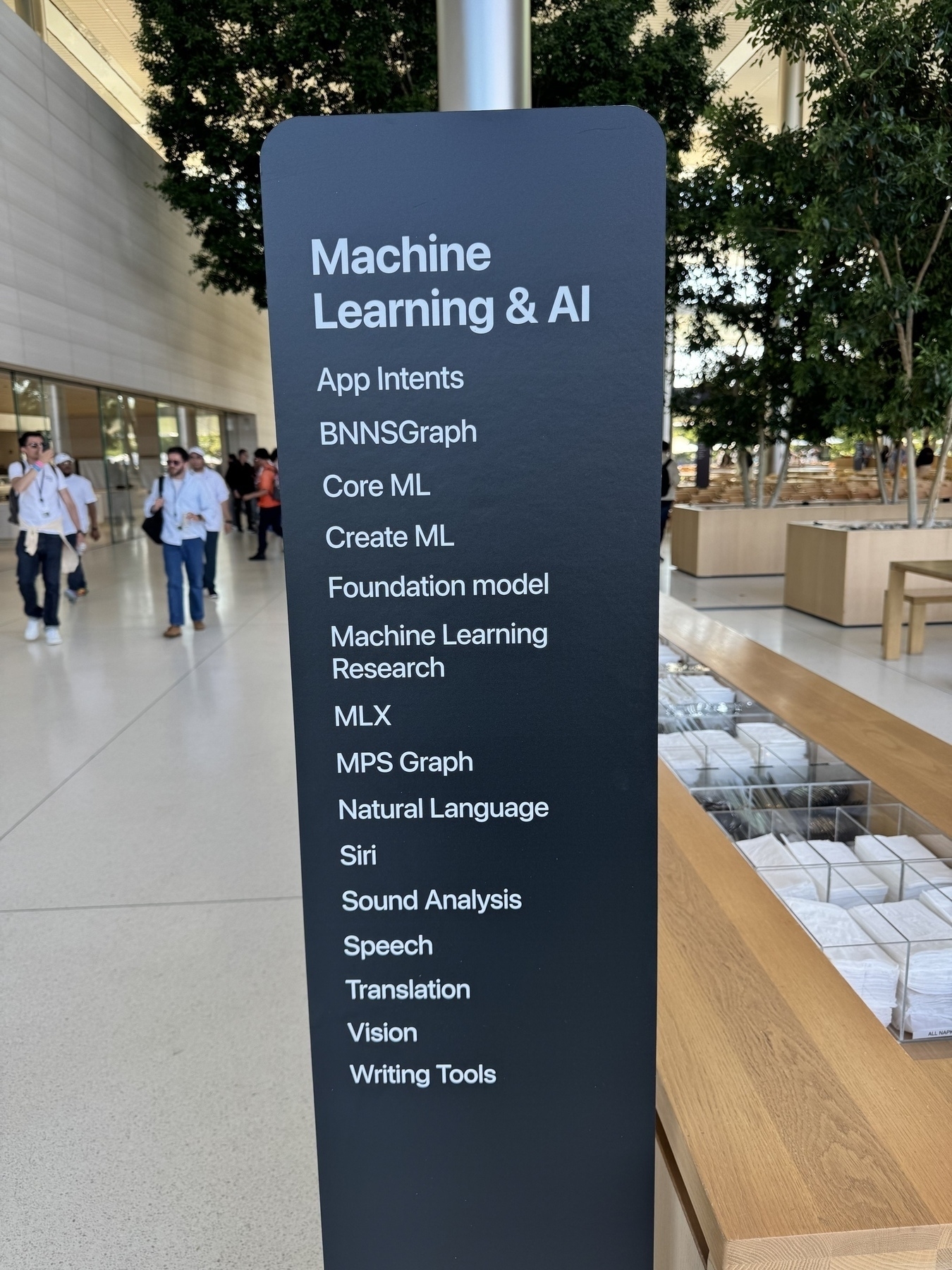

📍Apple Park, Cupertino, WWDC25

-

Tuesday April 8, 2025

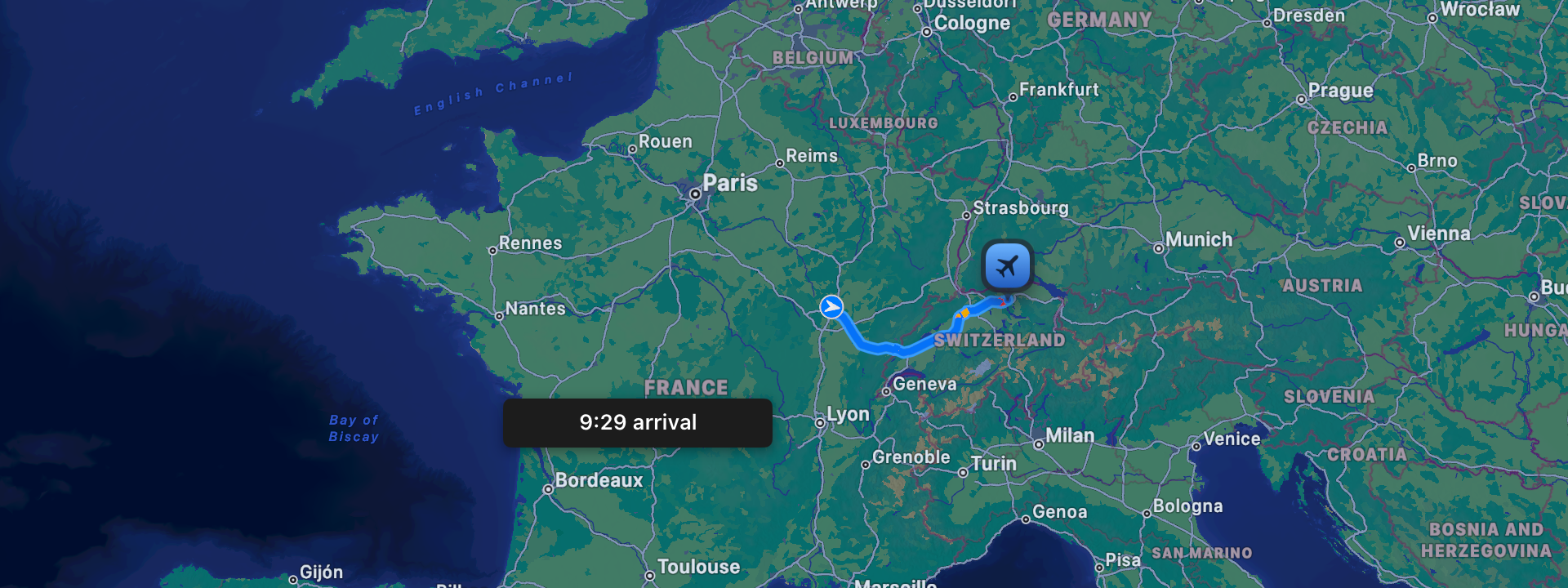

Two months from today I’m running my first half marathon and a few hours later I’m flying to my first WWDC.

-

Tuesday March 18, 2025

Did the Siri AirPods affirmative head nod as I was walking by someone on the street and it was weird.

-

Wednesday February 12, 2025

Swift 6 concurrency migration in progress for Art Museum. One of the biggest areas is refactoring all the CloudKit code from completion handlers to async/await.

-

Thursday January 30, 2025

Submitted a new build of Art Museum to App Review! Hope to share it with you soon.

-

Thursday November 21, 2024

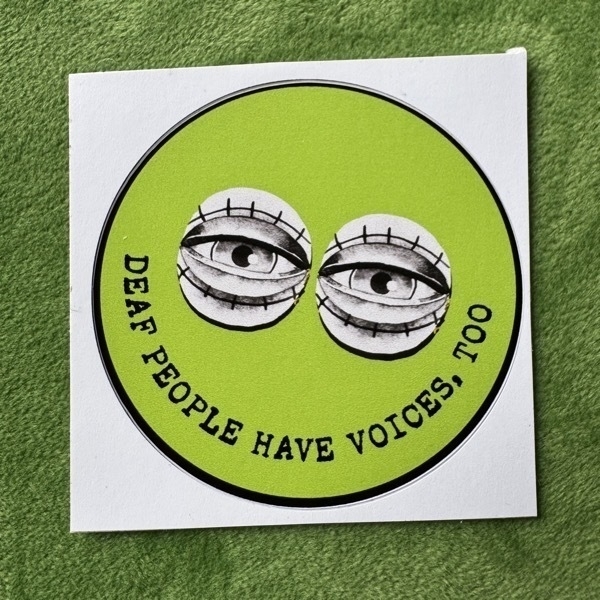

I started learning ASL this week!

-

Tuesday November 19, 2024

Follow thatdeafzinester on IG and explore their zine collection to learn more about audism.

-

Friday November 1, 2024

Working in the KEXP Gathering Space today. Seeing into the studio, I’m feeling inspired that a single human in front of a microphone is reaching people all over the world and helping make their day a little better.

-

Monday October 28, 2024

I wish you could share public read-only iCloud links to Freeform boards.

-

Wednesday October 23, 2024

📺 (via YouTube): Inside Apple's Audio Labs

ABC News’ Rebecca Jarvis visits Apple’s secret audio labs where the company’s engineers have spent years working on technology that can turn a set of AirPods into an FDA-cleared hearing aid.

-

Wednesday October 23, 2024

Could a Mac app with Rogue Amoeba-esque permissions keep a timeline history of your sonic alerts/notifications? I’ll often hear a sound and have no idea where it came from.

-

Wednesday October 23, 2024

Been making an effort to see more live music and it’s always so nourishing.

-

Tuesday October 22, 2024

new OS?! cloudOS for server hardware/private cloud compute services

no developer story yet, maybe never, but imagine CloudKit offering serverless compute for 3P apps to run private inference jobs on bigger models

-

Monday October 21, 2024

I think my recent rental car had “next gen” CarPlay. The main display had the usual driving directions, but a secondary route overview was shown in the driver dashboard. Also interesting: based on the screenshot, CarPlay provides a full-width asset and the Volvo’s OS blended in a portion of it.

-

Monday October 21, 2024

Usually a few minutes of crawling around on the ground leads to the cat-smacked AirPod, but not this time.

-

Friday October 18, 2024

🔊 Two Voice Devs Episode 211 – I guested this week to explore the evolving developer canvas across Siri, Shortcuts, App Intents and Apple Intelligence. With some throwback to how music, YouTube and Alexa led me to now. Two Voice Devs!

-

Such a letdown!

Thursday October 10, 2024

Phil Schiller introducing Siri at the iPhone 4S launch event in October 2011.

For decades, technologists have teased us with this dream that you’re gonna be able to talk to technology and it’ll do things for us. Haven’t we seen this before over and over? But it never comes true. We have very limited capability. We just learn a syntax. Call a name, dial a number, play a song. It is such a letdown! What we really want to do is just talk to our device.

-

They're in the AI area.

Thursday October 10, 2024

Walt Mossberg and Kara Swisher interviewing Steve Jobs at the All Things D conference in June 2010.

Walt: Last year at our conference we had a small search company called Siri.

Steve: Yeah. Well I don’t know if I would describe Siri as a search company.

Walt: Ok but it’s a search related company… you now own them right?

Steve: Yeah. We bought them.

Kara: Why?

Walt: There was a lot of speculation, well this company is kind of in the search area.

Steve: No, they’re not in the search area.

Walt: What are they in? How would you describe it?

Steve: They’re in the AI area.

-

Thursday September 5, 2024

Trying to stay calm.